Towards Probabilistic AI Weather Forecasting

A Latent Diffusion Model Approach

As climate change drives increasingly frequent and severe weather events, the field of weather forecasting faces new challenges that demand innovative solutions. In our ongoing research, we’re exploring how latent diffusion models can transform weather prediction by combining accuracy with computational efficiency. This blog outlines our preliminary work on a latent diffusion model approach, sharing early results and our vision for the path forward.

[update: 2025-07-20] Check out our work on Latent Diffusion Models for ensemble weather forecasting, LaDCast.

Background & Challenges

SOTA data-driven models (as of 2025) such as Aurora (Microsoft) and GraphCast / GenCast (Google) have surpassed traditional numerical weather prediction (NWP) models such as IFS-ENS / IFS-HRES (ECMWF). Yet several challenges remain before data-driven models become mainstream (IMO, Aurora is the most production-ready model):

- Enormous storage requirements (hundreds of terabytes @ 0.25°x0.25° / 1440x721) make iterative research and development costly

- Little discussion of uncertainty quantification for capturing extreme weather events

- Reliance on the ECMWF Reanalysis v5 (ERA5) dataset, with its inherent 5-7 day latency (except for Aurora)

Clarification: Iterating through hundreds of terabytes of data to train a single epoch is simply not feasible for most researchers. Increasing the resolution to 0.1°x0.1° (or higher) to capture more localized weather phenomena (e.g., predicting the intensity of hurricanes) would be even more challenging.
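To put the resolution jump in perspective, the sketch below counts grid points on a regular latitude-longitude grid. It assumes the grid includes both poles, which reproduces the 1440x721 and 240x121 shapes quoted in this post; the 3600x1801 shape for 0.1° is an extrapolation under that same assumption, and `grid_shape` / `grid_points` are hypothetical helper names.

```python
def grid_shape(resolution_deg: float) -> tuple[int, int]:
    """Return (n_lon, n_lat) for a regular lat-lon grid that includes both poles.

    Assumption: longitude spans 360 degrees without a duplicated meridian,
    latitude spans -90..90 inclusive (hence the +1).
    """
    n_lon = round(360 / resolution_deg)
    n_lat = round(180 / resolution_deg) + 1
    return n_lon, n_lat


def grid_points(resolution_deg: float) -> int:
    """Number of grid points in a single 2D field at the given resolution."""
    n_lon, n_lat = grid_shape(resolution_deg)
    return n_lon * n_lat


if __name__ == "__main__":
    for res in (1.5, 0.25, 0.1):
        n_lon, n_lat = grid_shape(res)
        print(f"{res}°: {n_lon}x{n_lat} = {grid_points(res):,} points per field")
    print(f"0.25° vs 1.5°: {grid_points(0.25) / grid_points(1.5):.1f}x more points")
    print(f"0.1° vs 0.25°: {grid_points(0.1) / grid_points(0.25):.1f}x more points")
```

Under these assumptions, a 0.25° field has roughly 36 times as many points as a 1.5° field, and moving to 0.1° multiplies the count by about 6 again, before accounting for variables, pressure levels, or time steps.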

Most SOTA models are formulated as deterministic models, which require artificially perturbing the initial conditions to generate ensemble forecasts, and this could introduce biases. Even the diffusion-based GenCast shows minimal gain (~2%) over the deterministic mode when using perturbed initial conditions. In comparison, the IFS-ENS ensemble mean shows a significant improvement (~25%) over the individual trajectories that form the ensemble.
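The comparison between an ensemble mean and its individual trajectories can be illustrated with a toy example. The sketch below is not how any of the models above are scored; it uses synthetic members built as "truth plus independent Gaussian noise" (an assumption made purely for illustration), and the helper names are hypothetical.

```python
import math
import random


def rmse(forecast, truth):
    """Root-mean-square error between two equal-length sequences."""
    return math.sqrt(sum((f - t) ** 2 for f, t in zip(forecast, truth)) / len(truth))


def ensemble_mean(members):
    """Point-wise mean over ensemble members."""
    return [sum(values) / len(values) for values in zip(*members)]


def make_toy_ensemble(truth, n_members, noise_std, seed=0):
    """Synthetic members: truth plus independent Gaussian noise (toy assumption)."""
    rng = random.Random(seed)
    return [[t + rng.gauss(0.0, noise_std) for t in truth] for _ in range(n_members)]


if __name__ == "__main__":
    truth = [math.sin(0.1 * i) for i in range(200)]
    members = make_toy_ensemble(truth, n_members=50, noise_std=1.0)

    # Score each trajectory on its own, then score the ensemble mean.
    member_rmse = sum(rmse(m, truth) for m in members) / len(members)
    mean_rmse = rmse(ensemble_mean(members), truth)

    print(f"average member RMSE: {member_rmse:.3f}")
    print(f"ensemble-mean RMSE:  {mean_rmse:.3f}")
    print(f"relative improvement: {100 * (1 - mean_rmse / member_rmse):.1f}%")
```

By the triangle inequality, the ensemble-mean RMSE can never exceed the average member RMSE. In this idealized setting the member errors are independent, so the gain is far larger than the figures quoted above; members of a real forecast ensemble presumably share correlated errors, which limits the gain.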

The ERA5 dataset, as its name suggests, is a reanalysis dataset, meaning it is generated by fusing observations with physical simulations. Although it is regarded as one of the most accurate records of past weather, the preview version of ERA5 has a latency of 5-7 days, so ML models trained on it must rely on NWP initialization at forecast time, which introduces biases.

Aims and Hypothesis

Our research explores a fundamentally different approach: compressing weather data and performing predictions in a latent space. We primarily aim to address the first two challenges above, namely storage requirements and uncertainty quantification. Because of the data size, we use the low-resolution ERA5 dataset (1.5°x1.5° / 240x121, ~4 TB) for our initial experiments; it is a downsampled version of the original ERA5 dataset (see WeatherBench2 (Google)). This is also the standard evaluation resolution at ECMWF (as mentioned in the WB2 paper). Below are our hypotheses with justifications:

- Compression

  - Models trained on different resolutions will have similar performance with proper scaling. (Empirical observation.)

  - Models trained on lossily compressed data still deliver acceptable performance. (Most SOTA models contain compress-decompress steps; some use residual/skip connections to retain high-frequency details, but effectively they train the encoder-decoder along with the autoregressive model.)

- Probabilistic Forecasting

  - Diffusion models are inherently probabilistic and can generate ensemble forecasts without perturbing initial conditions. With a proper setup, we can generate forecasts with high-frequency details that reflect the correct distribution. (Our prior work on sparse reconstruction, DiffusionReconstruct, shows that diffusion models can generate ensembles of high-frequency reconstructions that converge to the deterministic output.)

Early Experiments and Results

Before switching to the latent diffusion model, we trained a full-space model (i.e., no compression) and found that its performance was quite poor. Diffusion models are harder to train than deterministic models. We then switched to the latent diffusion framework and tested both a UNet and a Transformer for the downstream autoregressive prediction; the Transformer performed better.

In our preliminary explorations, we’ve developed a proof-of-concept using a CNN-based Variational Autoencoder on a 240×120 grid resolution (similar to Aurora, cropping out the south pole). This approach has achieved a compression ratio of 267× for nine weather variables (4 surface and 5 atmospheric) with 69 channels in total. Our latent diffusion model, trained on historical data from 1979 to 2015 and evaluated on 2019 data, shows encouraging results.
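As a rough sanity check on what a 267× ratio implies, the snippet below counts the values in one input snapshot and divides by the ratio. It assumes the ratio is measured in number of values (input elements over latent elements), which is not stated above; the latent shape itself is not given here, so only the total is computed. `latent_budget` is a hypothetical helper name.

```python
def latent_budget(channels: int, n_lon: int, n_lat: int, ratio: float) -> tuple[int, float]:
    """Return (input value count, implied latent value count) for one snapshot.

    Assumption: compression ratio = input values / latent values.
    """
    input_values = channels * n_lon * n_lat
    return input_values, input_values / ratio


if __name__ == "__main__":
    input_values, latent_values = latent_budget(channels=69, n_lon=240, n_lat=120, ratio=267)
    print(f"input values per snapshot:  {input_values:,}")
    print(f"latent values per snapshot: {latent_values:,.0f}")
```

With 69 channels on a 240×120 grid, one snapshot holds 69 × 240 × 120 = 1,987,200 values, so under this reading the latent representation would hold on the order of 7,400 values per snapshot.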

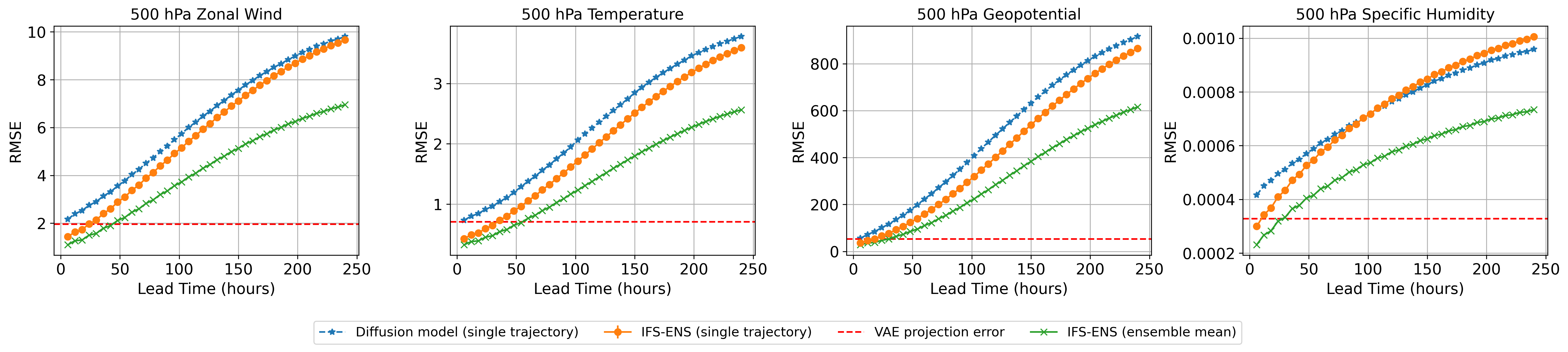

“Single trajectory” in the figure above denotes one prediction instance. Our diffusion model is initialized from the deterministic ERA5, while the IFS-ENS is initialized from perturbed initial conditions. At the current stage, our model is slightly worse than the IFS-ENS when evaluated trajectory-wise, and there is considerable room for improvement before it catches up. In comparison, Google’s GenCast model slightly outperforms the IFS-ENS (ensemble mean).

Hurricane Lorenzo (2019): A Case Study

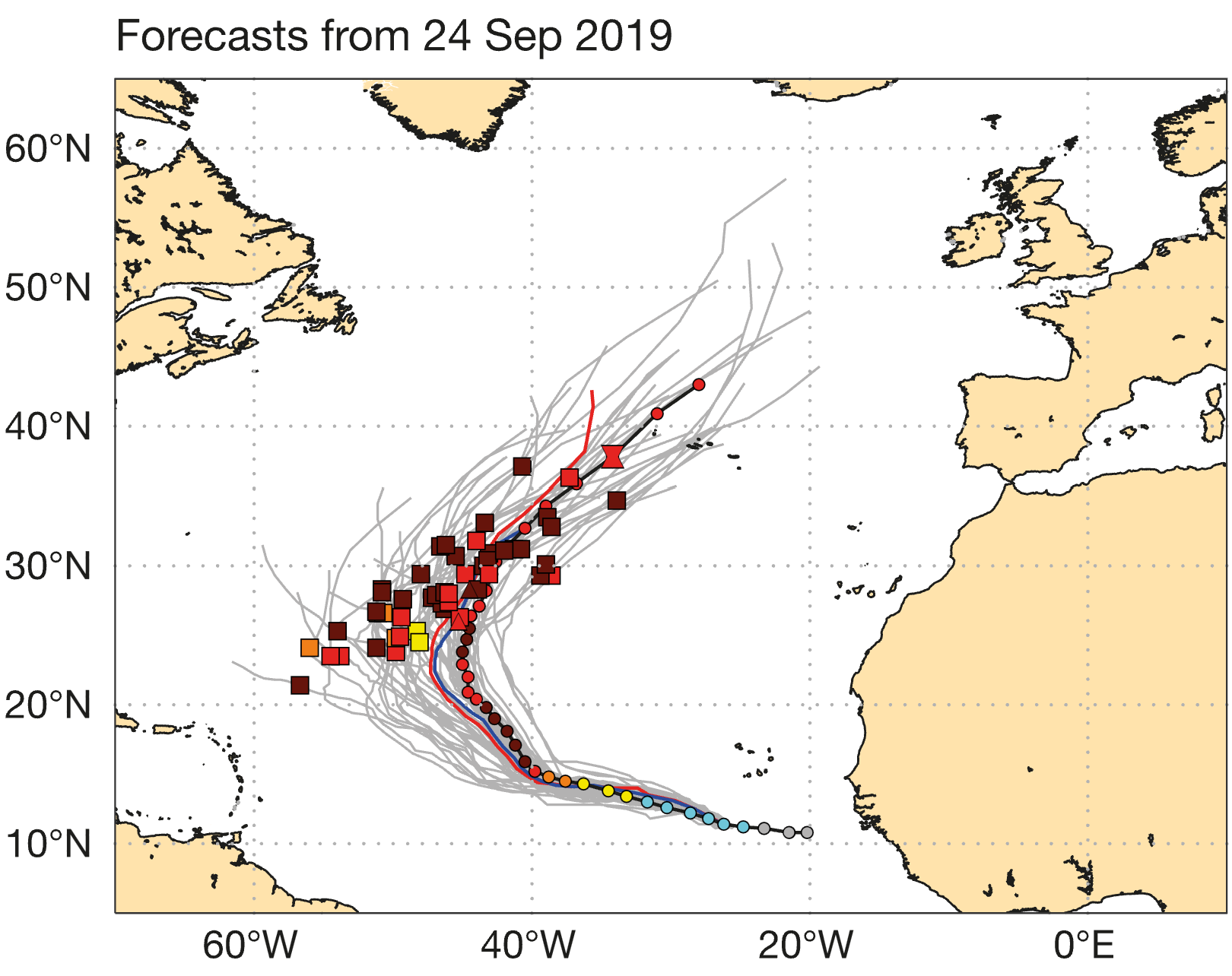

To evaluate our approach’s potential for tracking extreme weather events, we examined its performance on Hurricane Lorenzo. The bottom line of this section: the ground truth lies within the possible realizations captured by diffusion models. From the ECMWF newsletter:

“Lorenzo formed on 23 September south of Cape Verde. It reached its maximum intensity on 29 September as the easternmost Atlantic Category 5 storm on record.”

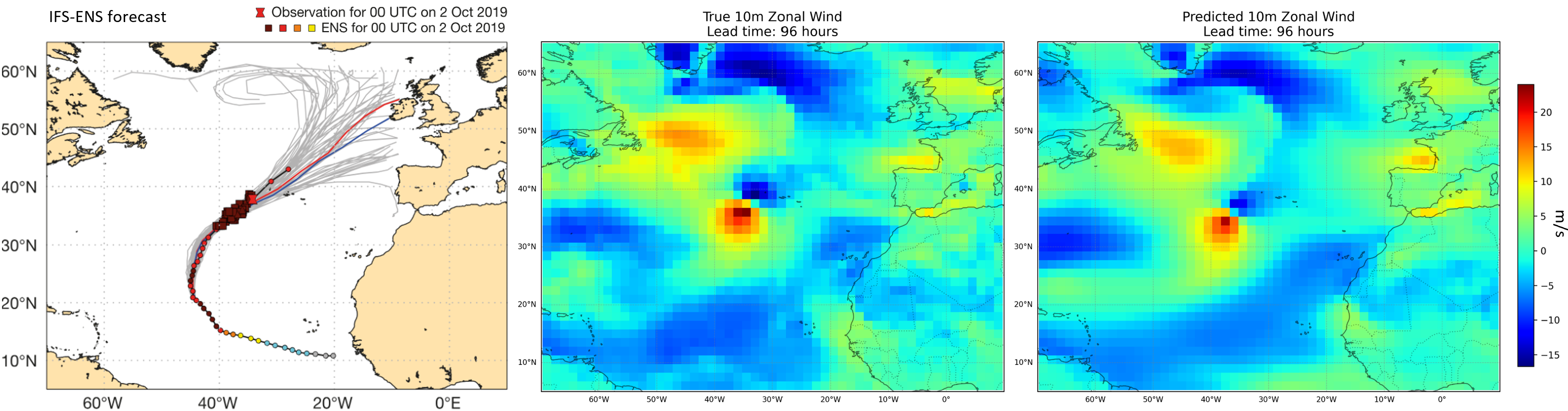

IFS-ENS:

“In the ensemble forecast from 28 September, there was good agreement between all ensemble members on a path towards the western Azores. However, here too we find that in most ensemble members the propagation speed was too slow and the predicted position of Lorenzo on 2 October was too far south compared to the corresponding observation.”

The figure above shows that the diffusion model tracks Lorenzo well and does not underestimate its propagation speed. However, due to the limited resolution, the hurricane’s intensity is not captured well.

The animation above shows a “cherry-picked” trajectory in which the hurricane track generally matches the ground truth. Note that other predicted hurricane trajectories drifted away from the ground truth. The main message here is that the ground truth lies within the possible realizations captured by diffusion models.
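One simple way to make "the ground truth is within the possible realizations" checkable is to test, at each time step, whether the observed storm position falls inside the bounding box spanned by the ensemble members. The sketch below is only an illustration of that idea, not the evaluation used for the figures here: the positions are made up (not Lorenzo data), the latitude/longitude box is a crude envelope that ignores longitude wrap-around, and the function names are hypothetical.

```python
def inside_envelope(truth_track, member_tracks):
    """For each time step, check whether the true (lat, lon) lies inside the
    min/max box spanned by the ensemble members' positions at that step."""
    flags = []
    for step, (true_lat, true_lon) in enumerate(truth_track):
        lats = [track[step][0] for track in member_tracks]
        lons = [track[step][1] for track in member_tracks]
        flags.append(
            min(lats) <= true_lat <= max(lats) and min(lons) <= true_lon <= max(lons)
        )
    return flags


def coverage(truth_track, member_tracks):
    """Fraction of time steps at which the truth lies inside the envelope."""
    flags = inside_envelope(truth_track, member_tracks)
    return sum(flags) / len(flags)


if __name__ == "__main__":
    # Made-up (lat, lon) positions for illustration only.
    truth = [(15.0, -40.0), (18.0, -42.0), (22.0, -43.0)]
    members = [
        [(15.0, -40.0), (17.0, -41.5), (20.0, -42.0)],
        [(15.0, -40.0), (18.5, -42.5), (23.0, -44.0)],
        [(15.0, -40.0), (17.5, -43.0), (21.0, -45.0)],
    ]
    print(inside_envelope(truth, members))
    print(f"coverage: {coverage(truth, members):.2f}")
```

A bounding box is a permissive criterion: it says nothing about how likely the ensemble considers the observed track, only that the track is not outside the range of sampled outcomes.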

Current Challenges and Next Steps

Our early results are encouraging, but substantial work remains. Some key challenges we’re addressing include:

- Compression quality: The current bottleneck in our approach is the projection error from data compression, which is relatively large compared to newer SOTA models. We are working on an autoencoder-based approach with a lower compression ratio and lower projection error.

- Architecture optimization: We’re exploring transformer-based encoding-decoding structures with unified embeddings for both atmospheric and surface variables.

- Scaling to higher resolutions: Testing how our architectures adapt to higher grid resolutions with few-shot fine-tuning.

- Real-time data assimilation: Developing methods to incorporate observations from diverse sources into the initial meteorological fields.

Acknowledgements

This work is supported by Los Alamos National Laboratory under the project “Algorithm/Software/Hardware Co-design for High Energy Density applications”.